Annotation Pipeline

Overview

After running SharkTrack on your videos, you’ll do two things: review the output and then compute MaxN.

- Review (clean + label) — Delete false detections and label true detections with a species ID.

- Compute MaxN — Generate species-specific MaxN metrics from your reviewed output.

Understand the Output

TL;DR: SharkTrack groups consecutive detections of the same animal into a track (with an ID). For each track, it saves one screenshot (.jpg) that you can review quickly. Your labels (given via the filenames) are then used to compute MaxN.

Output folder structure

Locate your output directory (default: ./output). It contains:

| File / Folder | Description |
|---|---|
| `internal_results/output.csv` | Raw detections (per frame/time) |
| `internal_results/overview.csv` | Summary per video (number of tracks found) |
| `internal_results/*/` | One subfolder per video |
| `internal_results/*/<track_id>.jpg` | One screenshot per track (this is what you review) |

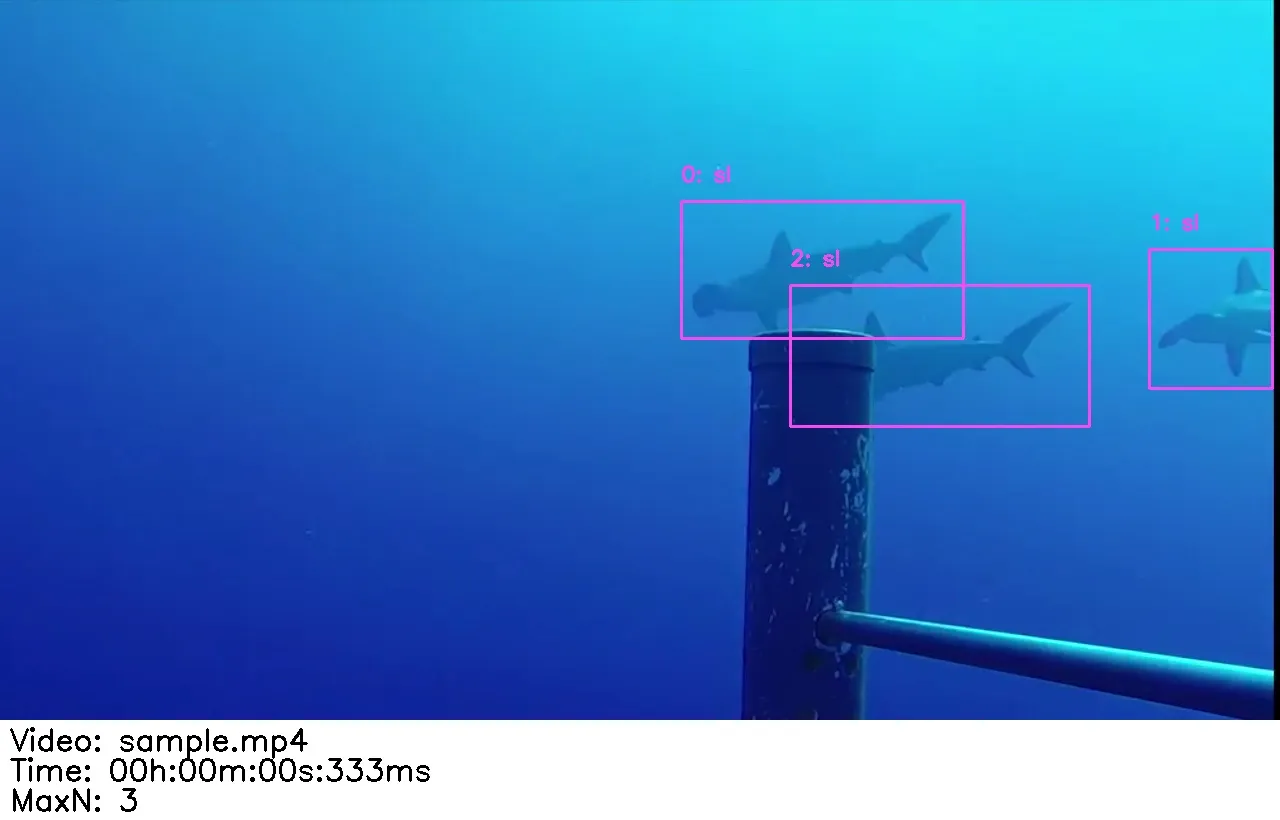
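If you want a quick count of how much there is to review, the folder layout in the table above can be walked with a short Python script. This is a minimal sketch, assuming the layout exactly as listed (one subfolder per video inside `internal_results`, each holding `<track_id>.jpg` files); `list_track_screenshots` is a hypothetical helper, not part of SharkTrack.

```python
from __future__ import annotations

from pathlib import Path


def list_track_screenshots(output_dir: str = "./output") -> dict[str, list[str]]:
    """Map each video subfolder in internal_results/ to its sorted screenshot names.

    Assumes the layout <output_dir>/internal_results/<video>/<track_id>.jpg.
    Returns an empty dict if internal_results/ does not exist yet.
    """
    results_dir = Path(output_dir) / "internal_results"
    if not results_dir.is_dir():
        return {}
    return {
        video_dir.name: sorted(f.name for f in video_dir.glob("*.jpg"))
        for video_dir in sorted(results_dir.iterdir())
        if video_dir.is_dir()  # skip output.csv and overview.csv
    }


if __name__ == "__main__":
    for video, screenshots in list_track_screenshots().items():
        print(f"{video}: {len(screenshots)} track screenshots to review")
```

Names are sorted as text, so `10.jpg` comes before `2.jpg`; that is harmless for counting.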

How to read a detection screenshot

In each video folder you will see one screenshot per track. The screenshot shows a bounding box around the shark/ray you should label.

The screenshot also shows the video file and timestamp, so you can refer back to the original footage when you’re unsure.

Step 1: Review (Clean + Label)

- For each video, open its output subfolder. It contains one detection screenshot per track, named `{track_id}.jpg`.

- Scroll through the images and focus on the animal inside the bounding box.

- If it’s not a shark/ray, delete the file.

- If it is a shark/ray, rename the file from `{track_id}.jpg` to `{track_id}-{species}.jpg`.

Example: 5.jpg → 5-great_hammerhead.jpg
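The naming convention is simple enough to check programmatically. The sketch below is a hypothetical helper (`parse_label` is not part of SharkTrack, and `utils/compute_maxn.py` may parse names differently); it assumes track IDs are integers, as in the `5.jpg` example, and that species names contain no dots.

```python
import re
from typing import Optional, Tuple

# Hypothetical pattern for "{track_id}.jpg" and "{track_id}-{species}.jpg".
LABEL_PATTERN = re.compile(r"^(?P<track_id>\d+)(?:-(?P<species>[^.]+))?\.jpg$")


def parse_label(filename: str) -> Tuple[int, Optional[str]]:
    """Return (track_id, species) for a screenshot filename.

    species is None when the file has not been labelled yet.
    Raises ValueError for names that don't follow the convention.
    """
    match = LABEL_PATTERN.match(filename)
    if match is None:
        raise ValueError(f"Unexpected screenshot name: {filename!r}")
    return int(match.group("track_id")), match.group("species")


if __name__ == "__main__":
    print(parse_label("5-great_hammerhead.jpg"))  # (5, 'great_hammerhead')
    print(parse_label("12.jpg"))                  # (12, None)
```

Only the first hyphen separates the ID from the species, so a name such as `7-great-hammerhead.jpg` still parses as species `great-hammerhead`.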

FAQ

What is a track?

The same elasmobranch usually appears in many consecutive frames. A track is SharkTrack’s way of grouping those detections as “this is the same animal I saw before”, and each track gets an ID.

Why are there multiple animals in the screenshot, but only one bounding box?

SharkTrack saves one screenshot per track, so you will always see only one bounding box. Only label the animal in the bounding box.

Why does this matter?

You only need to label one screenshot per track instead of thousands of frames. The script applies your label to all detections in that track when computing MaxN.
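The label propagation and the MaxN step can be sketched in a few lines of Python. This is an illustration, not SharkTrack's implementation: it uses the common definition of MaxN (the highest number of individuals of a species seen together in a single frame), assumes the detections have already been read from `internal_results/output.csv` into hypothetical `(frame, track_id)` pairs, and ignores any per-video grouping that `utils/compute_maxn.py` may apply.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple


def maxn_per_species(
    detections: Iterable[Tuple[int, int]],
    labels: Dict[int, str],
) -> Dict[str, int]:
    """Compute MaxN per species from per-frame detections and per-track labels.

    detections: (frame, track_id) pairs, one per detected animal per frame.
    labels: track_id -> species, i.e. what you encoded in the screenshot filenames.
    Tracks missing from `labels` (deleted screenshots) count as false detections.
    """
    per_frame: Dict[int, Counter] = defaultdict(Counter)
    for frame, track_id in detections:
        species = labels.get(track_id)
        if species is None:
            continue  # screenshot was deleted, so every detection in this track is dropped
        per_frame[frame][species] += 1  # the track's label applies to all its detections

    # MaxN: the highest number of individuals of a species seen together in one frame.
    maxn: Dict[str, int] = {}
    for counts in per_frame.values():
        for species, n in counts.items():
            maxn[species] = max(maxn.get(species, 0), n)
    return maxn


if __name__ == "__main__":
    detections = [(0, 1), (0, 2), (1, 1), (1, 2), (1, 3), (2, 4)]
    labels = {1: "great_hammerhead", 2: "great_hammerhead", 3: "blacktip_reef_shark"}
    print(maxn_per_species(detections, labels))
    # {'great_hammerhead': 2, 'blacktip_reef_shark': 1}
```

Because a deleted screenshot simply has no entry in `labels`, its whole track drops out without any extra bookkeeping.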

One frame isn’t enough to determine species

Each detection image shows the video name/path and timestamp, so you can go back to the original video to confirm the species.

I see the same elasmobranch in multiple detections

The model may split the same shark into two or more consecutive tracks. Classify all of them. Because the split tracks follow one another rather than appearing in the same frame, this won’t affect MaxN accuracy; it just means more images to classify.
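Written out with the usual definition of MaxN (the largest number of individuals of a species visible together in a single frame), the argument is short. The notation is ours, not SharkTrack's: $F(t)$ is the set of frames in which track $t$ is detected, and the split tracks are assumed not to overlap in time, which is what "consecutive" implies.

$$
\mathrm{MaxN}_s = \max_{f} \left| \{\, t : \mathrm{species}(t) = s,\ f \in F(t) \,\} \right|
$$

If one animal's track $t$ is split into $t_1$ and $t_2$, both labelled with the same species, with $F(t_1) \cap F(t_2) = \varnothing$ and $F(t_1) \cup F(t_2) = F(t)$, then every frame $f \in F(t)$ contains exactly one of $t_1, t_2$ and frames outside $F(t)$ contain neither. The count in each frame, and therefore its maximum, is unchanged.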

Pro Tips

- Do a first pass to remove wrong detections, then assign species labels in a second pass.

- Unsure about species/validity? Check the text at the bottom of the detection image for the video name and timestamp, then review the original video.

- Windows shortcuts: `F2` to rename, `Ctrl+D` to delete.

- macOS shortcuts: in Finder’s Gallery view, `Cmd+Delete` to remove, `Enter` to rename.
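Before moving on to Step 2, it can help to confirm that the second pass is complete. A minimal sketch, assuming the folder layout and naming convention described earlier in this guide: the hypothetical `check_review` helper lists screenshots still named `{track_id}.jpg`, plus any names that don't match `{track_id}-{species}.jpg` (for example, an underscore typed instead of a hyphen).

```python
import re
from pathlib import Path

# Hypothetical checks based on the Step 1 naming convention:
# "{track_id}.jpg" = not yet labelled, "{track_id}-{species}.jpg" = labelled.
UNLABELLED = re.compile(r"^\d+\.jpg$")
LABELLED = re.compile(r"^\d+-[^.]+\.jpg$")


def check_review(output_dir: str = "./output") -> dict:
    """List screenshots that are still unlabelled or don't follow the naming convention."""
    results_dir = Path(output_dir) / "internal_results"
    report = {"unlabelled": [], "malformed": []}
    if not results_dir.is_dir():
        return report
    for path in sorted(results_dir.glob("*/*.jpg")):
        relative = path.relative_to(results_dir).as_posix()  # e.g. "video_1/5.jpg"
        if UNLABELLED.match(path.name):
            report["unlabelled"].append(relative)
        elif not LABELLED.match(path.name):
            report["malformed"].append(relative)
    return report


if __name__ == "__main__":
    for problem, files in check_review().items():
        print(f"{problem}: {len(files)}")
        for name in files:
            print(f"  {name}")
```

Deleted screenshots simply don't appear, so an empty report means every remaining track has a species label.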

Collaborating

Want multiple users to annotate? Upload the entire output folder to Google Drive, Dropbox, or OneDrive and perform the cleaning steps there!

Step 2: Compute MaxN

Once you have reviewed all track screenshots, it’s time to generate MaxN.

- Open a terminal in the `sharktrack` folder (the same folder you used when running the model in the User Guide).

- Activate the virtual environment:
  - Anaconda: `conda activate sharktrack`
  - Windows: `venv\Scripts\activate.bat`
  - macOS / Linux: `source venv/bin/activate`

- Run the MaxN computation: `python utils/compute_maxn.py`

You will be asked for:

- the path to your output folder (the one you just reviewed)

- the path to the folder containing the original videos (optional, used to compute visualisations)

You will then see a new folder called `analysed`, containing the `maxn.csv` file and one subfolder per video with MaxN visualisations (if you provided the video folder).
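To glance at the results without opening a spreadsheet, a short Python sketch can print the first few rows of `maxn.csv`. Since this guide doesn't list the file's columns, the hypothetical `preview_csv` helper simply keys each row by whatever header the file has. Pass it the path to `maxn.csv` inside your `analysed` folder.

```python
import csv
import sys
from itertools import islice
from typing import Dict, List


def preview_csv(csv_path: str, limit: int = 5) -> List[Dict[str, str]]:
    """Return up to `limit` rows of a CSV file (e.g. maxn.csv) as dicts keyed by its header."""
    with open(csv_path, newline="", encoding="utf-8") as f:
        return list(islice(csv.DictReader(f), limit))


if __name__ == "__main__":
    # Hypothetical usage: python preview_maxn.py path/to/analysed/maxn.csv
    if len(sys.argv) == 2:
        for row in preview_csv(sys.argv[1]):
            print(row)
    else:
        print("usage: python preview_maxn.py <path/to/maxn.csv>")
```

All values come back as strings, as `csv.DictReader` does not convert types.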

Need Help?

- Issues: Submit on GitHub

- Questions: Email us

- Contributions: Pull requests, issues, or suggestions welcome — email